Query vs SQL vs Query Def

Last Updated 27 Feb 2019 Difficulty level : Moderate

Section Links:

Preamble

Append Only

Append + Update

Append + Update From Lookup Table

Update From Lookup Table

Append + Both Updates

Multiple Action Procedure

Conclusions

Feedback

This is the sixth in a series of articles discussing various tests done to compare the efficiency of different approaches to coding.

Example databases are provided in each case so the same tests can be done on your own workstations

Preamble Return To Top

Like many developers, when I started using Access some 20 years ago, I used queries and macros before moving across to using SQL statements in code.

When I made the change, I was informed how much more efficient it was to run SQL in code.

At some point after that, I read that was nonsense and that queries run faster because they are pre-optimised.

And, just to complicate things further, others said using query definitions was better than either of those.

Those expressing opinions for each view included experienced developers, some of whom were MVPs

Faced with this disparity, I long ago decided to continue mainly using what worked best for me – SQL statements in code though others will have their own preferences.

Here’s one forum thread discussing the different approaches and with differing opinions: SQL or QueryDef in VBA code?

Of course, with modern processors, the question is much less important than when I started using Access. However, I’ve never yet read a definitive answer to the question.

I am well aware that the best approach may depend on the nature of the query (or equivalent).

In an attempt to get a definitive answer, I have recycled my speed comparison test application for another set of tests.

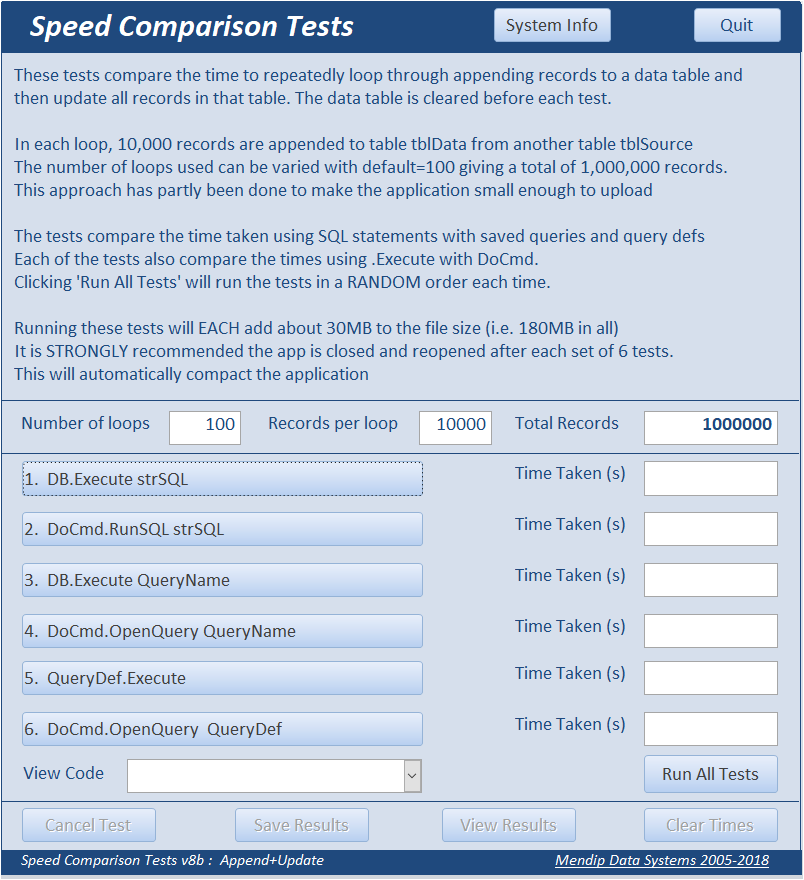

This time, I compared the time to add 1 million records to a data table in 100 loops of 10000 records appended from a source table and then to run update queries.

The tests compare the times using SQL statements, saved queries and query definitions

For each method, I also compared the use of db.Execute with DoCmd therefore giving 6 tests in all.

The app also 'randomly' varies the test order when running all the tests in turn.

The 6 tests each add 1,000,000 records after deleting those already present. The database grows from about 1.3MB to 180MB after running all 6 tests.

As a result, I decided to compact and reopen the application after each test.

I believe compacting forces Access to redo any saved query plan.

I have also tried running individual tests several times in a row to see what effect that has.

The reason for appending records in repeated loops is partly to reduce the size of the test database.

However, it also means than Access can optimise the query execution plans as appropriate for each method.

In the end I ran several variations on this test for reasons explained below.

Each test was run 20 times and average times calculated

a) Append ONLY Return To Top

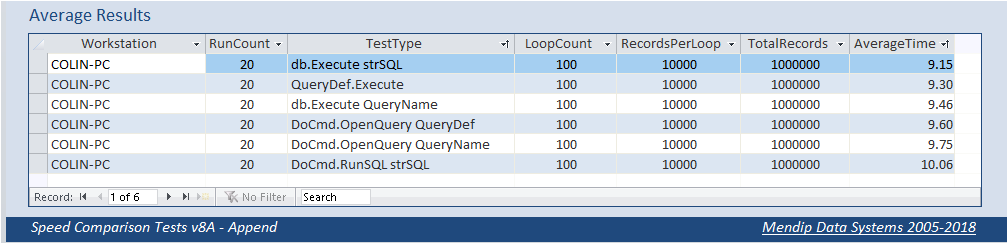

In the first set of tests, the times to append the 1,000,000 records were compared.

Although the difference was relatively small in my tests, it was very consistent in repeated testing.

Using db.Execute was ALWAYS faster than DoCmd.

DoCmd is an application method which needs to be interpreted before it can be run.

The slowest time was about 11% greater than the fastest time

However there was only about a 3% difference between the 3 execute methods

For these tests, running a SQL statement was fastest followed by QueryDef with a saved query slowest.

However for the DoCmd version, using a SQL statement is by some distance the slowest.

Click to download: Speed Comparison tests 8.2a - Append Approx 1.5 MB (zipped)

b) Append + Update Return To Top

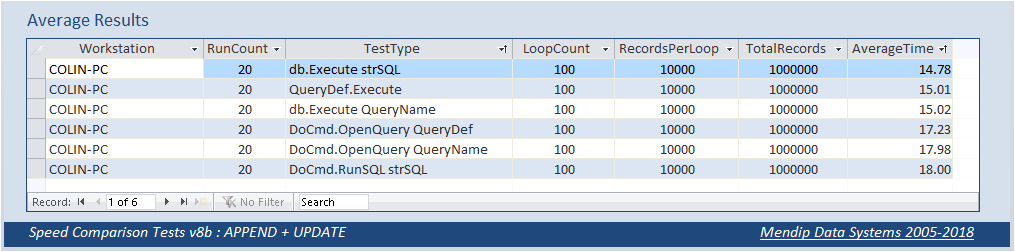

The first set of tests were very simple and it could easily be argued that this wasn’t a typical everyday situation.

So I decided to follow up by running a simple update on the entire data table of 1 million records.

The update was also very simple just changing the value of all records in a text field to a different value

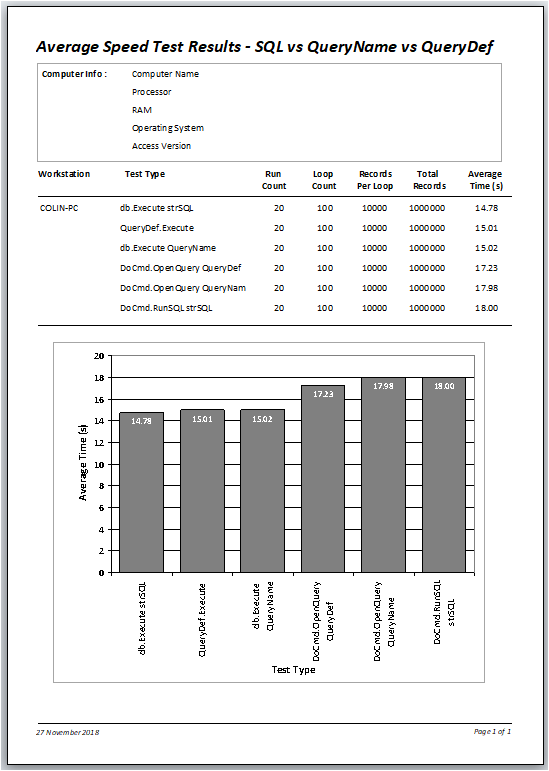

The relative order was the same as in the first set of tests but there was a much larger difference between the average times with the slowest times almost 22% more than the fastest.

However the results fell into two distinct groups with only 1.6% difference in the 3 execute methods

Here are the same results displayed in a report:

Click to download: Speed Comparison tests 8.2b - Append + Update Approx 1.5 MB (zipped)

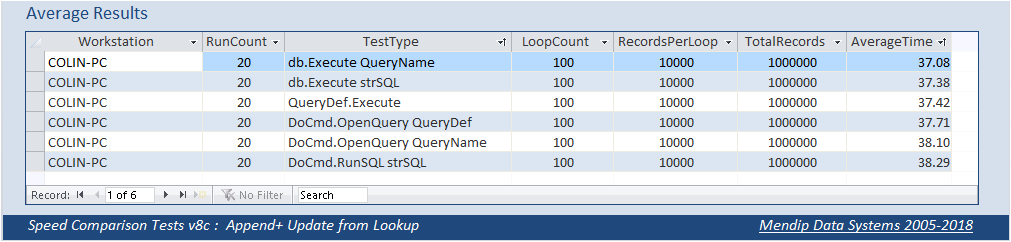

c) Append + Update from Lookup table Return To Top

Next, I decided to run a more complex update query where the text field value was updated to one of 10 possible values from a lookup table. Inevitably this significantly increased the time for each of the tests and I anticipated the differences in times would be further increased.

However, that wasn’t the case.

The differences between the fastest and slowest times were reduced to about 10%

Also, for the first time, running a saved query was faster than running a SQL statement

Click to download: Speed Comparison tests 8.2c - Append + Update from Lookup Table Approx 1.5 MB (zipped)

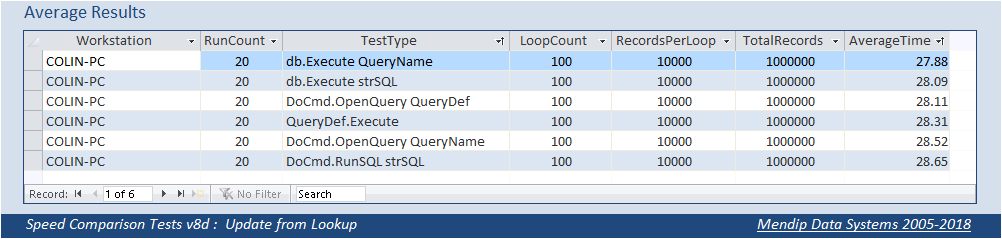

d) Update from Lookup table Return To Top

For obvious reasons, I decided to repeat the previous test but this time only timing the update part.

The saved query name was again fastest but there was only a 3% overall variation between the results.

An unexpected result was that for query definitions, using DoCmd was faster than Execute

Click to download: Speed Comparison tests 8.2d - Update from Lookup Table Approx 1.5 MB (zipped)

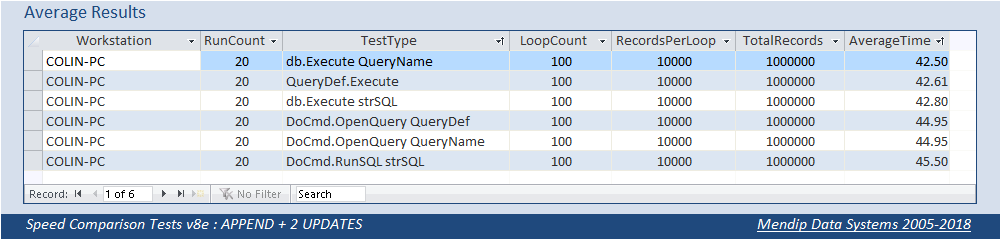

e) Append & Both Updates Return To Top

In this example, both sets of update query were included in the results.

Saved queries were again fastest with query definitions slightly faster than using a SQL statement

However there was only 7% overall variation in the results and around 0.7% between the 3 execute methods

Click to download: Speed Comparison tests 8.2e - Append + both Updates Approx 1.5 MB (zipped)

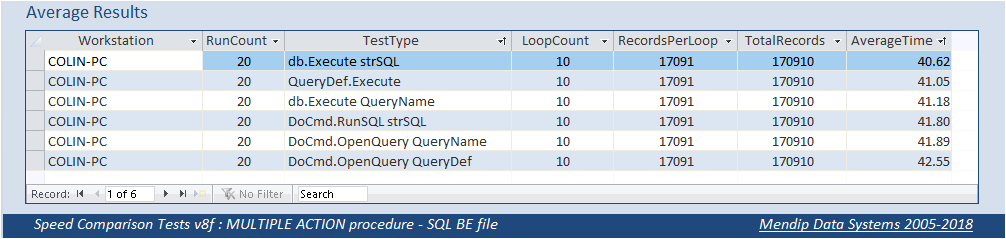

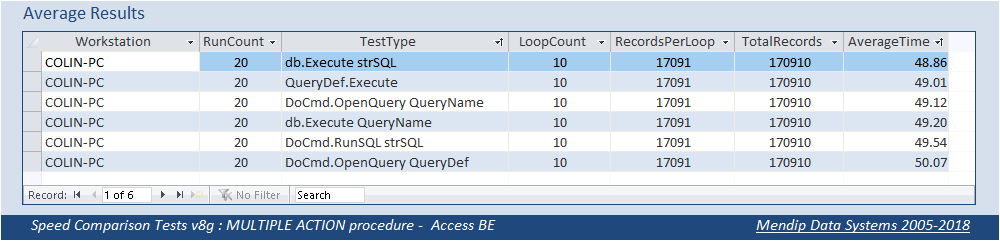

f) Multiple Action Procedure Return To Top

Finally I decided to run a procedure from one of my production databases with a SQL Server BE.

The procedure included reference to 8 separate SQL tables.

In the original procedure, a SQL table GradeAverages is updated after each school reporting session.

As I didn’t want to modify the 'real' data, I modified the procedure to update a local table instead.

The modified procure included 3 make table queries (to create temp tables), 1 append query and 3 update queries followed by 3 statements to delete the temp tables.

Each procedure was run 10 times in a loop in order to optimise the query execution plan for each test.

There was less than 5% variation between the different tests and only 1.3% between the execute methods.

I would have expected greater variation between the different methods.

However, despite the relative ‘complexity’ of the procedure, using executing SQL statements was easily the fastest method.

So that I could upload this version of the test, I copied all 8 SQL tables used to a separate Access BE file.

No changes were made to the table structures, indexes etc.

For my own interest, I ran the same tests again.

The overall order was almost unchanged but all times were about 20% slower than using the SQL BE file.

This result was somewhat unexpected and may merit a separate investigation.

Until now, I had only ever compared performance before & after upsizing to SQL.

Many users are disappointed by the performance when converting tables to a SQL BE.

The reality for many is that performance after upsizing is often slower than before. In order to improve performance it is necessary to make changes which utilise how SQL Server retrieves data.

That may form the basis of a separate article in the future

Click to download: Speed Comparison tests 8.2g - Multiple Action Procedure Approx 34 MB (zipped)

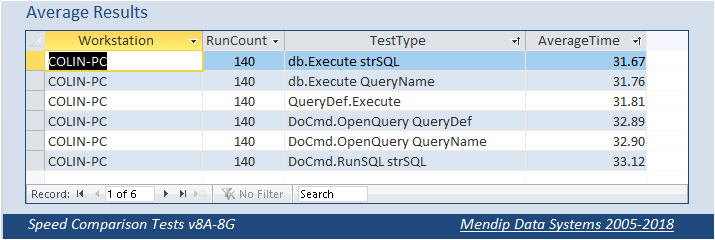

Conclusions Return To Top

Clearly it isn’t possible to make sweeping conclusions from all of these tests.

As each of the 7 sets of tests were performed the same number of times, it might be interesting to view the overall average times by each method.

Notice that the order is reversed for the 3 tests using DoCmd compared to Execute

There is less than 5% variation between the 6 methods overall in these 140 tests on each.

Furthermore, there is less than 0.5% difference between the 3 execute methods

Of course, this isn’t scientific as each of the 7 versions are performing different actions.

However the main trends from all the above test are that:

1. Using Execute methods are almost always faster than using DoCmd. In certain cases up to 20% faster though usually much less than that

2. For simple queries, dbExecute strSQL is generally fastest and DoCmd.RunSQL strSQL is slowest

3. For more complex queries, executing a saved query is usually fastest as Access can optimise the query execution plan.

However the variation between the different tests is often smaller.

4. For procedures involving a number of action queries, executing a SQL statement may once again be fastest though once again the differences are very small.

5. Executing query definitions appears to never be the fastest method in these tests

6. Executing queries based on SQL tables may be faster than doing the same queries on linked Access tables

Overall, however, in most cases, the differences aren’t that significant and other factors may be more important in determining the best method to use.

For example, if you prefer keeping all code in one place to allow an overview and easy editing, using SQL statements may be best. Conversely if you wish to keep as much as possible separate from VBA code, using saved queries works well

Phillip Stiefel has expressed similar views in more detail:

The results of this comparison should not be the main factor in choosing one approach over any other.

More important in my opinion, is something not related to performance. If you use ad-hoc SQL in your VBA-Code the SQL’s logic is visible right there in the code. You might find this more convenient because it saves you the context switch of looking at the query when trying to comprehend the whole logic of a particular piece of your application.

On the other hand, using stored queries and referencing those in the code will decouple the query logic from the calling code. This allows you to change query logic without touching the code at all. Having this separation might be beneficial when maintaining the application.

This is not too big a factor in pure Access development. However, when you develop client-server-applications with a SQL-Server-Backend, this can be huge benefit. Then you are able to change a lot of logic by modifying views and stored procedures on the server without ever touching and re-deploying the code in the frontend application. This forms a real abstraction layer between the data storage (tables) and the front-end-application. A huge benefit for long-term maintenance of an application.

Additionally, the compilation of queries and reuse of query plans becomes a much bigger factor if it does not happen only on the client for a single user, but on the database server for all users of your application.

Feedback

Please use the contact form below to let me know whether you found this article useful or if you have any questions/comments.

Do let me know if there are any errors

Please also consider making a donation towards the costs of maintaining this website. Thank you

Colin Riddington Mendip Data Systems Last Updated 27 Feb 2019

Return to Speed Test List

Page 6

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

Return to Top

|

|

|